Integrating Hippo CMS with ElasticSearch Engine

Woonsan Ko

2015-01-23

Many organizations are increasingly adopting open source search engines as their integrated platform to serve massive numbers of search queries across their various content sources such as databases, elastic intranets and CMS repositories. ElasticSearch is one of the hottest open source (enterprise) search engines nowadays.

With regard to Hippo CMS, in many use cases, people want to index documents in the enterprise search engine whenever documents get published in Hippo CMS, and update or delete the indexed documents whenever the documents get depublished. That way, they can use the Enterprise Search frontend application for various content sources and web applications.

Hippo CMS is very flexible, and so perhaps you can think ahead and write code for a solution very quickly with Hippo Event Bus. OK. I'll write an event listener and register it with a DaemonModule. It should work, right?

Well, problems are sometimes not as easy as you initially thought. You want to Always Be Closing the issues. But if you don't think thoroughly about the problem and evaluate candidate approaches, you might end up with an Always-Being-Chaotic situation in production, and maybe you will feel as bad as Always Being Crushed. Have I got your attention? :-)

In this article, I will describe the problem first and discuss approaches and solutions with a real demo project.

Problem that Matters

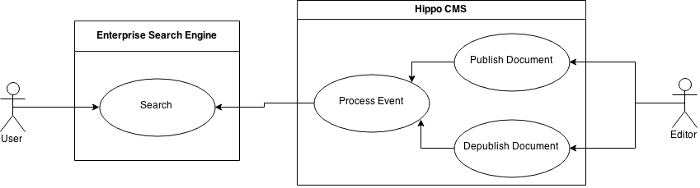

All right. What's the problem that really matters? The problem we want to solve here is to integrate Hippo CMS with an ElasticSearch enterprise search engine. Hippo CMS is required to update search index data based on document publication/depublication events mostly, as shown in the diagram below.

If you implement an event listener and register it in Hippo Event Bus, then it should look almost the same as the diagram shown above as well. The event listener can probably invoke the external search engine directly (e.g., through either a REST service or a direct API call). However, that approach immediately raises some architectural questions:

- If the external system becomes unavailable at some moment for some reason, then event messages may be lost. How can we guarantee that all the events are processed, or at least keep failed messages somewhere? Reliability matters here.

- If invocations on the external search engine take longer than expected at some moment for some reason, then they may affect the performance (e.g., response time) of the CMS Frontend Application and the user experience as well. How can we avoid this? Performance matters, too.

- We would like to focus only on our business logic instead of spending time on learning and maintaining a Hippo Event Bus event listener implementation in Java ourselves. Also, we might want to be able to change the event processing steps much faster in the future (e.g., add an e-mail notification task to the event processing steps in less than 2 hours). Modifiability matters.

- We would like to monitor the event processing and be able to manage it (e.g., turning it on/off). If we implement event listener Java code ourselves for these features, then it would increase complexity and cost a lot. How can we achieve that? Manageability also matters.

- and maybe more...

Thinking in ABC

There's another ABC in the software architecture field: the Architecture Business Cycle, meaning that the relationships between business and architecture form a cycle with feedback loops that can be used to handle business and system growth. Whatever it means, the important thing here is that you, as an architect, should understand the requirements and quality attributes (reliability, performance, modifiability, manageability, time-to-market, etc.) in order to design a good solution architecture and have it implemented properly so that the business keeps growing.

The clip on the right is from Glengarry Glen Ross (film) in which Alec Baldwin as Blake is shaking up the salesmen.

First of all, let's think about the Reliability requirement mentioned above. You don't want to lose any messages even when the remote system becomes unavailable. So, we need to store each message to guarantee that it is not lost, and then forward it to the destination. Right! That's the Store-and-Forward architectural pattern. That's what we need here. It will help meet the Performance requirement as well, because the Store step finishes right away and the Forward step gets done asynchronously, so the performance and user experience of the CMS Frontend Application are no longer affected.

Next, let's talk about the Modifiability requirement. If you decide to write Java code from scratch directly using Hippo Event Bus, then it might look okay at first. But what if you want a more balanced solution that meets the Manageability requirement as well? Would I need to expose JMX myself to support manageability (e.g., start, pause and stop operations or other parameters)? Well, well, well... Let's try to find good open source OTS (off-the-shelf) software. Wow, luckily we have Apache Camel! It supports all kinds of Enterprise Integration Patterns in a simple DSL with a lot of built-in components, and it is so lightweight that it can be embedded almost everywhere! You can manage it at runtime (e.g., start, pause, stop, and change attributes and even steps) with Hawtio, too!

However, there's one missing piece. Which component can we use to receive Hippo Event Bus messages in a Camel context? Don't worry. There's a Hippo Forge plugin for this: Apache Camel - Hippo Event Bus Support (which provides the hippoevent: component for that)!

So you can simply define a route that receives Hippo workflow events only for 'publish' or 'depublish' and stores each one in a file, for instance:

<route id="Route-HippoEventBus-to-File">

<!-- Subscribe publish/depublish events as JSON from HippoEventBus. -->

<from uri="hippoevent:?category=workflow&amp;methodName=publish,depublish" />

<!-- Convert the JSON message to String. -->

<convertBodyTo type="java.lang.String" />

<!-- Store the JSON string to a file in the 'inbox' folder. -->

<to uri="file:inbox?autoCreate=true&amp;charset=utf-8" />

</route>

The demo project explained later will give a full example for you.
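The same store step could target a message queue instead of a file folder. The following is only a minimal sketch, not taken from the demo project: it assumes the activemq-camel library is on the classpath and a broker is listening at tcp://localhost:61616, and the queue name hippo.events is made up for illustration.

```xml
<!-- Assumption: registers the ActiveMQ component under the 'activemq' URI scheme. -->
<!-- The broker URL below is a placeholder for your own broker. -->
<bean id="activemq" class="org.apache.activemq.camel.component.ActiveMQComponent">
  <property name="brokerURL" value="tcp://localhost:61616" />
</bean>

<route id="Route-HippoEventBus-to-Queue">
  <!-- Subscribe publish/depublish events as JSON from HippoEventBus. -->
  <from uri="hippoevent:?category=workflow&amp;methodName=publish,depublish" />
  <!-- Convert the JSON message to String. -->
  <convertBodyTo type="java.lang.String" />
  <!-- Store the JSON string in a queue; 'hippo.events' is a hypothetical name. -->
  <to uri="activemq:queue:hippo.events" />
</route>
```

The bean definition belongs in the Spring application context, while the route goes inside the Camel context. Note that inside an XML file, the & separating URI options has to be written as &amp;amp;.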

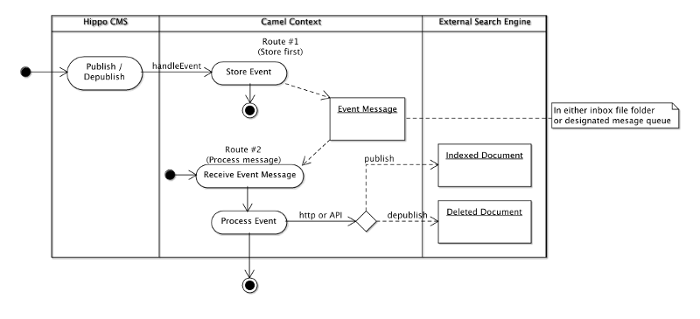

Anyway, let's summarize our solution, which should meet all the quality requirements listed earlier:

- We will configure a Camel route with the hippoevent: Apache Camel component in the Camel context. The component will receive all Hippo Event Bus messages.

- That route will first store the messages in either a file inbox folder or a dedicated message queue.

- We will configure another Apache Camel route with either the file: or the activemq: component to read the stored messages and invoke the search engine service to update the search index.

Depending on your real use cases, you might want to configure more advanced routes (such as parallel processing, message translation, etc.), but the fundamental idea here is Store-and-Forward by leveraging Apache Camel, Camel components and Apache Camel - Hippo Event Bus Support.
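The forward step can be sketched as a second route. This is an illustration under assumptions rather than the demo project's actual configuration: searchIndexUpdater is a hypothetical Spring bean that would call the search engine, and the inbox folder is assumed to be the one the store route writes to.

```xml
<route id="Route-File-to-SearchEngine">
  <!-- Poll the 'inbox' folder and delete each file once it has been processed successfully. -->
  <from uri="file:inbox?delete=true&amp;charset=utf-8" />
  <!-- Read the stored JSON message as a String. -->
  <convertBodyTo type="java.lang.String" />
  <!-- Hand the message to a hypothetical bean that updates the search index. -->
  <to uri="bean:searchIndexUpdater" />
</route>
```

With delete=true, a file is removed only after it has been processed successfully, so a message whose delivery failed should stay in the folder and be picked up again on a later poll. The file: component also has a moveFailed option if you would rather park failed messages in a separate folder.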

An activity diagram would help understand this solution:

With this approach, you can fulfill the quality attributes:

- Reliability: even if the external system becomes unavailable at some moment for some reason, you don't lose any event message because all the failed messages are stored under either the inbox file folder or the designated message queue.

- Performance: by separating the process into multiple routes (Store-and-Forward), you can let the second route poll or receive the message and process it asynchronously. This minimizes the impact on Hippo CMS/Repository performance and the user experience.

- Modifiability: all the event processing is basically configured and executed by the Apache Camel context. You can configure any enterprise message integration patterns by leveraging various Apache Camel components. You can focus on your business logic.

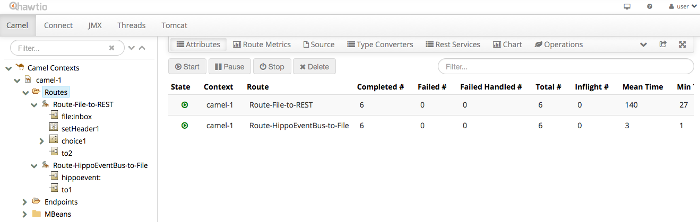

- Manageability: you can take advantage of an Apache Camel monitoring tool such as Hawtio. You can monitor the process, pause, stop or start a route, and even change parameters at runtime.
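For the Reliability attribute in particular, Camel's own error handling can retry a failed call before giving up. Here is a minimal sketch, assuming the forwarding route fails with a java.io.IOException when the search engine is unreachable; the retry count and delay are arbitrary example values, not settings from the demo project.

```xml
<camelContext xmlns="http://camel.apache.org/schema/spring">
  <!-- Retry up to 3 times, 5 seconds apart, when a route throws an IOException. -->
  <onException>
    <exception>java.io.IOException</exception>
    <redeliveryPolicy maximumRedeliveries="3" redeliveryDelay="5000" />
  </onException>

  <!-- The store and forward routes would be defined here. -->
</camelContext>
```

Because the exception is not marked as handled here, the exchange still fails once the retries are exhausted, so the stored message should not be treated as processed.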

Play with Demo Project

You can build the demo project with Maven and run the demo applications locally with the Maven Cargo Plugin (Apache Tomcat embedded).

You can check out, build and run the demo application as follows:

$ svn co https://forge.onehippo.org/svn/camel-hippoevt/camel-hippoevt-demo/trunk camel-hippoevt-demo

$ cd camel-hippoevt-demo

$ mvn clean package

$ mvn -P cargo.run -Dcargo.jvm.args="-Dsearch.engine=es"

The demo project also contains other useful demo scenarios such as running with Apache Solr Search or Apache ActiveMQ. Please find more information on the demo project at https://bloomreach-forge.github.io/camel-events-support/.

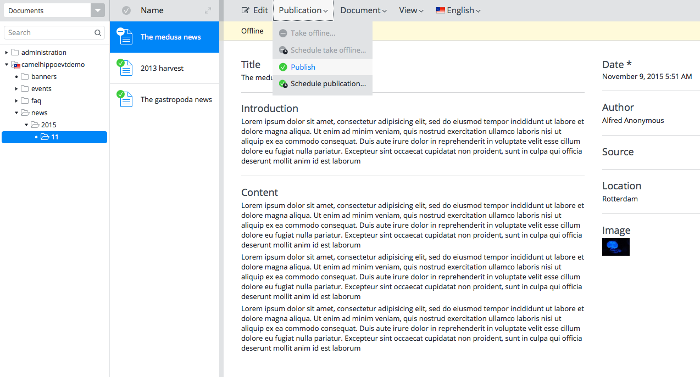

In the CMS UI, open a document, take it offline and then re-publish it.

By now, the document publication/depublication events must have been passed to the Camel routes.

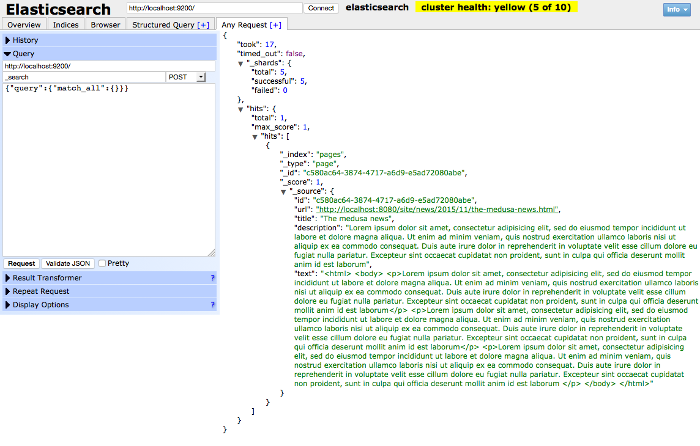

Now, try to search the content in the ElasticSearch frontend UI at http://localhost:9200/_plugin/head/.

Browse the auto-created index, "pages", and browse or search the content. You can even click on the "url" field value to visit the document SITE link.

Also, try taking a document offline and publishing it again a few more times, and see that the changes are synchronized properly in the search engine.

If you visit http://localhost:8080/hawtio/, then you will be able to manage all the Camel components and routes at runtime. Isn't it great?

Conclusion

I have discussed the most common use cases of ElasticSearch integration with Hippo CMS, and the requirements and quality attributes that really matter to business, architects and engineers. Based on that simple analysis, I proposed a reasonable and balanced solution that fulfills all the quality requirements by leveraging Apache Camel, Camel built-in components and the Apache Camel - Hippo Event Bus Support forge module.

The use cases we can support with this approach can be extended further. For example, you can apply this architectural approach to CDN integration, External Caching Service integration, etc.

Also, you will find more useful information and details on the website of the Apache Camel - Hippo Event Bus Support forge module. Always Be Checking out! Always Be Committed to qualities! :-)
